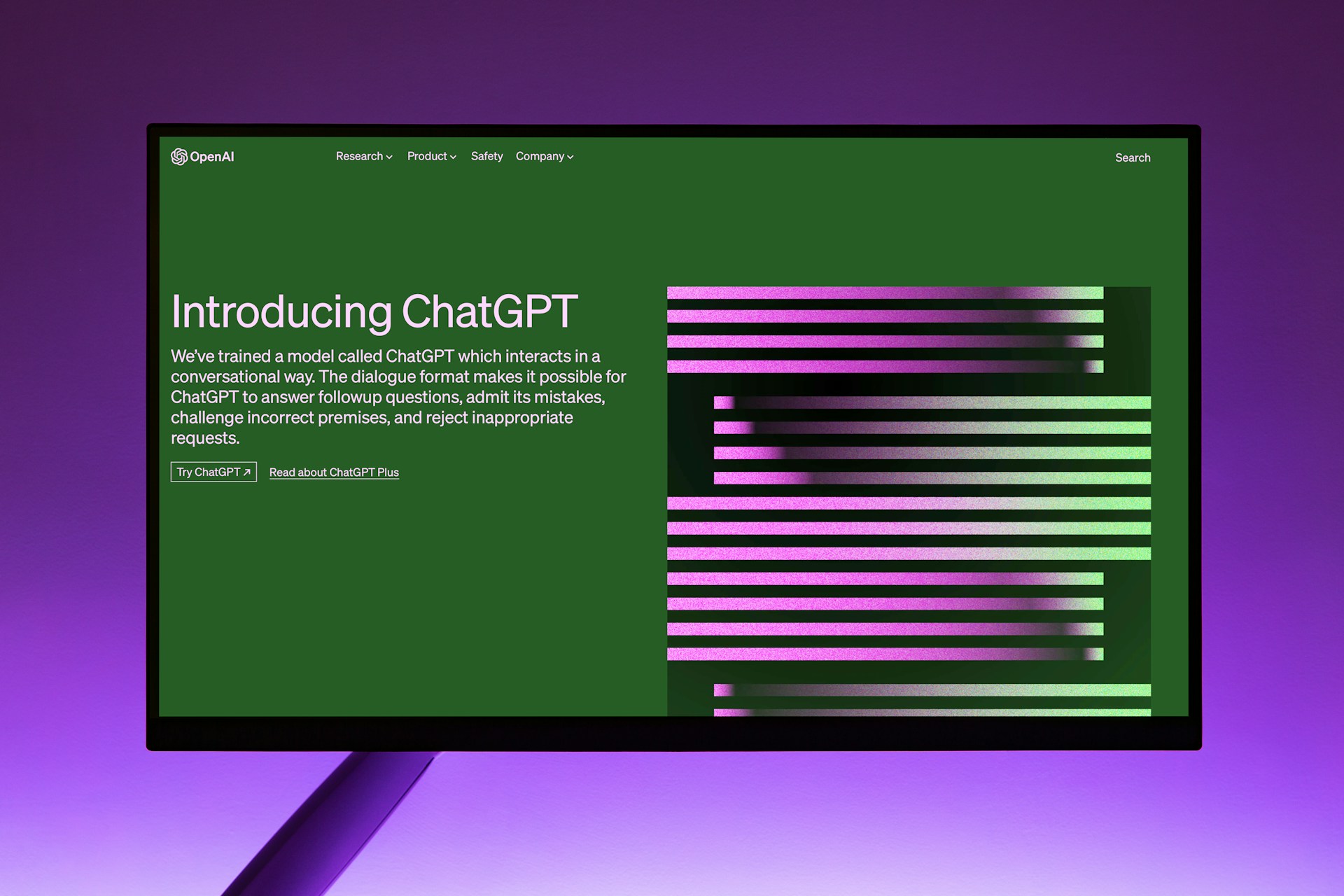

What happens when language, the very fabric of human thought, is no longer exclusive to humans? Enter GPT: Generative Pre-trained Transformer. It sounds clinical, almost forgettable. But behind that sterile acronym is a force reshaping how we read, write, and think. Just as the printing press revolutionized the written word by democratizing information, GPT technology is transforming language itself by automating it.

Trained on oceans of text and fine-tuned to mimic our linguistic nuance, GPT models like the ones behind OpenAI’s ChatGPT now craft essays, debug code, translate poetry, and ghostwrite love letters. A simple prompt is all it takes, and voilà: text emerges, fluent and eerily human.

Think of GitHub Copilot, a coding assistant that whispers entire functions into a programmer’s ear, or the content machines churning out newsletters, scripts, even pseudo-journalism. Are these tools, or collaborators? Assistants, or algorithmic auteurs? We’re not just using language models—we’re entering a dialogue with them.

The Anatomy of a Thinking Machine

If GPT is the new oracle, then what gears turn behind the curtain? To understand the mind of the machine, we must dissect its name. Generative. Pre-trained. Transformer. Each word is a clue to the architecture of artificial thought.

Generative is the showman of the trio. GPT doesn’t just recall; it creates. Feed it a prompt, and rather than regurgitating past text, it predicts what comes next, one token (a word or word fragment) at a time, using probabilities honed over billions of examples.

It’s a statistical conjurer, offering not a single answer but an ocean of possibilities, weighted and chosen in real time. The results? Original poems, jokes, even code—all stitched together from the pattern-language of the internet. Are we witnessing creativity, or the ultimate act of remix?
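To make “weighted and chosen in real time” concrete, here is a minimal Python sketch of one decoding step: turning a model’s raw scores (logits) into probabilities with a softmax, then sampling from them. The candidate words and their scores are invented for illustration, and `temperature` is a common decoding setting rather than a detail taken from any particular GPT product. A real model scores every token in a vocabulary of tens of thousands of entries, then repeats this step for each new token.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(candidates, logits, temperature=1.0, rng=random):
    """Pick one candidate at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The cat sat on the"
candidates = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(logits, temperature=t)])

rng = random.Random(0)  # fixed seed so the draws are repeatable
print([sample_next(candidates, logits, 1.0, rng) for _ in range(5)])
```

Lowering the temperature concentrates probability on the top-scoring word; raising it spreads probability across the alternatives, which is one reason the same prompt can produce different text on different runs.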

Pre-trained is the memory—vast, synthetic, and indiscriminate. GPT models are trained on colossal datasets scraped from books, blogs, forums, and forgotten corners of the digital world. It is in this chaotic babel that the model finds order.

It learns not facts but patterns, not meaning but probability. Like a precocious child raised by the entire internet, it absorbs language structures before ever being asked to produce something meaningful. Is that wisdom—or just the illusion of it?

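And then there is the Transformer, the silent architect. Introduced in 2017 in the paper “Attention Is All You Need,” this architecture broke from the word-by-word sequential processing of earlier recurrent networks and embraced something more radical: self-attention. It lets the machine weigh each word in relation to the other words in its context, computing all of those relationships in parallel. (In GPT, each word looks only at the words that came before it, which is what lets the model learn to predict what comes next.)

No longer forced to read strictly one step at a time, it takes in the whole passage at once rather than as a slow relay of words. It’s this leap, like the shift from typewriter to word processor, that makes GPT more than a chatbot.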
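The core computation behind self-attention can be sketched in a few lines. This is a simplified, single-head version of scaled dot-product attention, assuming made-up three-dimensional word vectors and using the vectors themselves as queries, keys, and values (real Transformers learn separate projections for each and run many attention heads in parallel). Because GPT predicts text left to right, the sketch applies a causal mask: each word scores itself and the words before it, and those scores become weights that sum to 1.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(vectors):
    """Single-head scaled dot-product attention with a causal mask.

    Simplification: each vector serves as its own query, key, and value;
    a real Transformer derives these with learned weight matrices.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: position i sees only 0..i
        scores = [dot(query, key) / math.sqrt(d) for key in visible]
        weights = softmax(scores)
        # Each output is a weighted blend of the visible value vectors.
        blended = [sum(w * v[k] for w, v in zip(weights, visible)) for k in range(d)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 3-dimensional embeddings for a four-word sentence
words = ["the", "river", "bank", "flooded"]
vectors = [
    [0.1, 0.0, 0.2],
    [0.9, 0.1, 0.0],
    [0.8, 0.3, 0.1],
    [0.2, 0.9, 0.4],
]

outputs, weights = causal_self_attention(vectors)
for word, row in zip(words, weights):
    print(f"{word:>8}", [round(w, 2) for w in row])
```

Each printed row shows how much one word draws on the words up to and including it. In a trained model, learned weights like these are what can let “bank” draw on “river.”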

What happens when a machine begins to understand context better than we do? To grasp how this ‘thinking machine’ operates, start with its foundations: GPT’s leap in neural network design comes from pairing massive training datasets with the Transformer architecture.

Reading Between the Tokens

What does it mean for a machine to “read” your words? When you type a question into a GPT model, you’re not speaking to it; your text is encoded as a sequence of tokens. Words, punctuation, and fragments of meaning are broken into these units, which are often, though not always, smaller than whole words.

This is tokenization, the model’s version of literacy. It doesn’t see language as we do; it sees math. Each token becomes a number (an ID in the model’s vocabulary), and each number is mapped to a vector, a list of learned values the network can compute with. In this way, language is stripped of romance and rendered computational. But isn’t that what computers have always done: transform the world into digits and digits into action?
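A toy tokenizer shows the idea. GPT models actually use byte-pair encoding (BPE), with vocabularies learned from data; the sketch below swaps in a simpler greedy longest-match splitter over a tiny, hypothetical vocabulary, then maps each token to an ID and each ID to a vector. The embedding table is filled with random numbers purely as a placeholder for values a real model would learn.

```python
import random

# Hypothetical toy vocabulary: a few subword pieces plus single letters as a
# fallback. Real GPT tokenizers learn far larger vocabularies with BPE.
VOCAB = ["un", "believ", "able", "token", "ization", "is", " ", "!",
         "a", "b", "e", "i", "l", "n", "o", "s", "t", "u", "v", "z"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(text):
    """Split text greedily into the longest vocabulary pieces, left to right."""
    pieces, i = [], 0
    while i < len(text):
        for end in range(len(text), i, -1):  # try the longest span first
            if text[i:end] in TOKEN_TO_ID:
                pieces.append(text[i:end])
                i = end
                break
        else:
            raise ValueError(f"no vocabulary entry covers {text[i]!r}")
    return pieces

def embed(ids, dim=4, seed=0):
    """Look up one vector per ID in an embedding table (random here, learned in practice)."""
    rng = random.Random(seed)
    table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in VOCAB]
    return [table[i] for i in ids]

pieces = tokenize("tokenization is unbelievable!")
ids = [TOKEN_TO_ID[p] for p in pieces]
vectors = embed(ids)
print(pieces)
print(ids)
print(len(vectors), "vectors of length", len(vectors[0]))
```

The sentence splits into pieces such as `token`, `ization`, `un`, `believ`, and `able`, which illustrates how a single word can become several tokens.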

Then comes the machine’s magic trick: self-attention. Unlike older models that trudged from word to word like readers of a teleprompter, the Transformer architecture allows GPT to consider all the words in its context at once, weighing their relationships with uncanny precision.

The model learns that “bank” might mean money in one sentence and the edge of a river in another, depending on the company it keeps. Isn’t this ability to attend, to weigh meaning based on surrounding signals, eerily close to human inference?

Through this lens, GPT develops contextual understanding. Not understanding in the conscious, soulful sense, but a simulation of coherence so good it fools us daily. It keeps track of what you just said, at least within the stretch of text it can see at once. It mimics nuance.

It even knows when to pause, to elaborate, to surprise. But is that understanding—or is it a hall of mirrors, reflecting back what we’ve taught it to expect? When a machine responds with uncanny relevance, are we seeing intelligence—or our own language refracted back at us?

To explore the contrast between traditional NLP and GPT’s transformer-based paradigm, read our companion article, NLP vs. GPT: Architectural Innovations and Linguistic Evolution.

Teaching the Machine to Speak

How do you teach a machine to talk like us, think like us, even argue like us? You don’t just program intelligence—you train it. GPT models aren’t given a rulebook; they learn by devouring language itself.

Pre-training: The Great Absorption

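First comes pre-training, an unsupervised feast. The model ingests massive swaths of text: Wikipedia entries, Reddit threads, novels, recipes, obituaries. No labels, no annotations, just raw, unfiltered human expression. The text supplies its own answers: at every position, the task is to predict the token that actually comes next.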

GPT doesn’t learn right from wrong; it learns patterns. Like a child who eavesdrops on every adult conversation but is never told what’s true, it picks up grammar, syntax, tone, rhythm. But is it truly understanding—or just performing an elaborate act of mimicry?
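The claim that pre-training needs no labels becomes clearer in code. In this minimal sketch, a made-up two-sentence corpus is turned into (context, next token) training pairs, where the “label” is simply whatever word actually came next. A hypothetical bigram counter then stands in for the neural network: it is nowhere near a Transformer, but it picks up patterns from raw text in the same spirit, with no one telling it what is true.

```python
from collections import Counter, defaultdict

def next_token_pairs(tokens, context_size=3):
    """Turn raw, unlabeled tokens into (context, target) training examples.

    The target for each example is just the token that came next in the
    text, which is why this kind of training needs no human annotation.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tuple(tokens[max(0, i - context_size):i])
        pairs.append((context, tokens[i]))
    return pairs

def train_bigram(tokens):
    """Toy stand-in for a neural network: count which token follows which."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

corpus = "the cat sat on the mat . the dog sat on the rug .".split()

for context, target in next_token_pairs(corpus)[:4]:
    print(context, "->", target)

model = train_bigram(corpus)
print(model["sat"].most_common(1))  # the most frequent word after "sat"
print(model["the"].most_common())   # several plausible continuations of "the"
```

A real GPT replaces the counting with a Transformer whose parameters are adjusted, by gradient descent, to assign high probability to each target token; carving training examples out of raw text works on the same principle.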

This process echoes the invention of the printing press: once-rare texts became abundant, and knowledge moved from the hands of the elite to the masses. Now, GPT reads all of it, then learns to speak from the chorus.

Fine-Tuning: Giving Purpose to Prediction

But general knowledge isn’t enough. That’s where fine-tuning enters—a process akin to specialization. After pre-training, the model is further trained on smaller, curated datasets with labels. Here, the model is taught to translate French to English, summarize news articles, or answer legal questions with context-aware precision.
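Fine-tuning data differs from pre-training data mainly in that a curated source supplies the answer. This sketch assumes a simple prompt/completion format for a French-to-English task; the `<answer>` and `<end>` markers, the example sentences, and the whitespace tokenization are all hypothetical. It also applies a loss mask so that only the completion tokens are graded, which is one common approach in supervised fine-tuning rather than a documented detail of any particular GPT model.

```python
from dataclasses import dataclass

@dataclass
class Example:
    prompt: str
    completion: str

# Hypothetical labeled examples for a French-to-English translation task
DATASET = [
    Example("Translate to English: Bonjour le monde", "Hello world"),
    Example("Translate to English: Merci beaucoup", "Thank you very much"),
]

def build_training_sequence(example):
    """Join prompt and answer into one sequence plus a per-token loss mask.

    Mask value 0 = prompt token (not graded), 1 = completion token (graded).
    Whitespace splitting stands in for a real tokenizer.
    """
    prompt_tokens = example.prompt.split() + ["<answer>"]       # hypothetical separator
    completion_tokens = example.completion.split() + ["<end>"]  # hypothetical end marker
    tokens = prompt_tokens + completion_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(completion_tokens)
    return tokens, loss_mask

for example in DATASET:
    tokens, mask = build_training_sequence(example)
    graded = [tok for tok, m in zip(tokens, mask) if m == 1]
    print(" ".join(tokens))
    print("  graded:", graded)
```

Masking the prompt keeps the model from being rewarded for merely copying the instruction; the training signal comes only from producing the labeled answer.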

This is where GPT stops being a generalist and starts acting like a domain expert. Suddenly, a once-general model becomes a medical assistant, a coding partner, or a chatbot that mimics customer support reps with alarming fluency.

Examples of Fine-Tuned GPT in Action

- Translation: Models fine-tuned on multilingual corpora can rival dedicated translation tools like Google Translate.

- Summarization: Long reports distilled into concise bullet points—perfect for the modern attention span.

- Q&A Systems: GPT becomes a knowledge oracle, answering questions with the calm certainty of a machine that never blinks.

Is this enhancement—or domestication? When we fine-tune a model, are we sharpening a tool—or narrowing an intelligence?

These training methodologies have underpinned a rapid advancement in AI capabilities. To understand how these concepts translated into increasingly powerful models, see our detailed overview of how the GPT series evolved from GPT-1 to GPT-4.1.

The Many Masks of GPT

What happens when language itself becomes automated? When the once-human domain of words—our most sacred tool for thinking, persuading, imagining—is delegated to a machine that never sleeps, never forgets, never doubts? GPT doesn’t just talk—it performs. And across industries, it’s already playing multiple roles, each more uncanny than the last.

Content Generation: Automation or Authorship?

From blog posts to novels, GPT is now co-author to a million unseen collaborations. Marketing teams deploy it to write product descriptions in seconds. Journalists use it to generate summaries or draft stories under deadline pressure. Novelists test plotlines and dialogue with a machine that can channel Hemingway one moment and cyberpunk the next.

But who holds the pen? Is this creativity, outsourced and stripped of ego? Or is it the beginning of a new genre—human-machine literature, where authorship is a shared hallucination?

Customer Service: The Polite Illusion of Empathy

Call a bank. Visit a tech support site. Increasingly, your first interaction is with a GPT-powered assistant. These bots don’t just answer questions; they simulate empathy, handle frustration gracefully, and perform the ritual of care without ever caring.

- 24/7 Availability: No burnout, no overtime, just infinite politeness.

- Multilingual Mastery: Instant translation and seamless code-switching across global support systems.

- Scalability: One model, millions of users—an army of assistants with a single brain.

Is this efficiency, or emotional theater? Are we comforted, or simply pacified by a machine trained to sound like someone who understands?

Education and Research: The New Tutor, the Silent Co-Author

Students now use GPT to explain calculus problems, summarize dense philosophy texts, or brainstorm thesis topics. Researchers lean on it to draft literature reviews, translate academic jargon, or even write code for experiments.

- Personalized Learning: Adaptive explanations that adjust to the user’s level.

- Research Assistance: Rapid synthesis of complex ideas and cross-disciplinary connections.

But if the machine builds the scaffolding of our thinking, who is actually learning? When GPT drafts our thoughts before we’ve formed them ourselves, are we gaining insight, or outsourcing our intellect?

In this new paradigm, GPT is not just a tool—it is a voice, a mirror, a collaborator. And perhaps most provocatively, it is a question: what parts of ourselves are we willing to automate?

The Ghosts in the Machine

What do we risk when we teach machines to speak in our voice? With every new GPT iteration, the language gets smoother, the responses more convincing—but beneath the fluency lies a tangle of unresolved dilemmas.

Ethical Considerations: The Bias We Can’t Unteach

GPT models don’t invent language—they inherit it. And with it, they absorb the biases, stereotypes, and prejudices of their training data. Racism, sexism, misinformation—they’re not glitches; they’re echoes.

The model doesn’t know what’s right—it knows what’s common. Like early mass printing that spread both enlightenment and propaganda, GPT democratizes speech while amplifying its darkest frequencies.

- Misinformation: A confident tone can turn fiction into fact. Who verifies the machine?

- Privacy: Trained on publicly scraped data, GPT can unintentionally regurgitate traces of real conversations, emails, or documents. Where is the line between learning and surveillance?

Advancements: Toward Smarter, Smaller, Safer

Researchers now seek models that are not only larger but wiser. Efficiency is a frontier—making powerful models accessible without vast computing costs. Transparency is another—how do we understand why a model made a decision?

- Explainable AI: Peering inside the black box of algorithmic thought.

- Alignment: Teaching models not just to predict words, but to serve human values.

Can we build a language model that knows when not to speak? Or are we destined to chase sophistication while losing control of the voice we’ve given machines?

When Language Becomes Infrastructure

GPT is not just an AI milestone; it’s a shift in the architecture of thought itself. From tokenized whispers to transformer-powered dialogue, it’s reshaping how we create, communicate, and compute. Just as the printing press redefined literacy, GPT reframes fluency as something shareable with machines. But this isn’t just about marveling at innovation; it’s about using it.

Writers, coders, educators, entrepreneurs: GPT is your collaborator now. Not a replacement, but a reflection. The question is no longer “What can it do?” It’s “How will you use it?” Or better yet: what will you build with a machine that speaks your language?